This year I attended re:Invent in person for the first time. I had attended past events online but, despite getting free tickets through the AWS Ambassador programme, had never been able to make it in person. This year, Sourced provided budget for flights and accommodation and, of course, five days off to attend. I was joined by my Sourced colleague KangZheng, who became an AWS Ambassador earlier this year.

First weekend

I arrived on Saturday, 26th of November, but at 8 AM it was too early to collect my badge at the airport, so I went straight to my hotel and spent the rest of the day walking around Las Vegas. I soon realised that the scale of this city is very deceptive: what looked like a 10-minute walk on Google Maps actually took half an hour. Blocks here are vastly larger than in Singapore, or any other city I have visited, for that matter.

On Sunday morning, I walked (35 minutes) to the main event area at the Venetian. The entry and check-in area was impressive, with a massive screen running along the entire wall behind the check-in desks. I collected my event pass and some swag.

On Sunday evening, we had the ASEAN networking event. I saw several clients and people I had met before at local AWS events and AWS user groups, including Steve, who runs the AWS user group in Singapore. There was also a large contingent from DBS Bank, one of Sourced’s Singapore clients.

Monday

The session I enjoyed most on Monday was about quantum computing, where the speaker provided a great analogy to help understand how quantum differs from traditional computing.

The analogy used a soap bubble, like the ones my kids blow in the park. When they blow through soapy water, it forms a sphere. We could use a supercomputer and enter the experiment’s physical details, such as the viscosity of the soap solution; it would then take a couple of days of maths to calculate what shape the bubble will become. The result, of course, is a sphere. However, the bubble itself does not use maths to determine its shape. It simply follows the laws of physics and settles into its lowest energy state: a sphere.

Traditional computers use maths, which takes a lot of computation for complex problems. Like the soap bubble, quantum computers use physics to derive a result. This is why they are so fast, but also why they only work on the types of problems that can be solved with physics. Good use cases include materials science and chemistry challenges.

Another speaker took a more technical deep-dive into the technology. The basic unit of quantum processing is the qubit: the more qubits, the more powerful the computer. Traditional computing power grows linearly; for example, 4 bits provide 4 times the processing power of 1 bit (1 × 4). Qubits grow exponentially, so 4 qubits provide 16 times the processing power of 1 qubit (2 to the power of 4: 2 × 2 × 2 × 2 = 16).
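To make the scaling concrete, here is a minimal Python sketch of the simplified model from the talk: classical capacity grows with the number of bits, while an n-qubit register is described by 2 to the power of n amplitudes. The function names are my own illustration, and counting amplitudes is a simplification rather than a real measure of quantum computing performance.

```python
def classical_capacity(n_bits: int) -> int:
    # Simplified linear model used in the talk: n bits ~ n units of capacity.
    return n_bits


def qubit_state_space(n_qubits: int) -> int:
    # An n-qubit register is described by 2**n complex amplitudes.
    return 2 ** n_qubits


if __name__ == "__main__":
    for n in (1, 2, 3, 4, 10):
        print(f"n={n:>2}  classical={classical_capacity(n):>2}  "
              f"qubit amplitudes={qubit_state_space(n):>4}")
```

For n = 4, this gives 4 for the classical model and 16 for the qubit state space, matching the 2-to-the-power-of-4 calculation; at 10 qubits, the gap is already 10 versus 1,024.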

The challenge is that qubits are like toddlers: they can’t sit still and want to talk to everyone else in the room. For successful computing, we need the qubits to stay in place and only talk to the qubits we want them to talk to. Depending on the quantum machine’s design, this is achieved with very low temperatures or specific types of lasers.

Quantum computing requires a very different way of working with computers and involves a steep learning curve. While quantum computers are still at a very early stage, there is an opportunity for early adopters to get ahead of competitors once the technology goes mainstream.

The service this talk was ultimately promoting was Amazon Braket, which provides access to quantum computing in the cloud. The service supports different types of quantum systems, which is important because different types are better suited to different use cases. Braket is also well integrated with other AWS services, making it possible to run hybrid workloads in which traditional computing manages the data pipeline, feeding tasks to the quantum computer and retrieving its results. More information about this service can be found here: https://aws.amazon.com/braket/

On Monday evening, we had the AWS Ambassador meetup. It was great to meet fellow ambassadors from across the globe. Fellow author and ambassador Saurabh Shrivastava was kind enough to give me a signed copy of his book, Solutions Architect’s Handbook, 2nd edition.

We also managed a group shot of all the ambassadors from the Amdocs family who were at re:Invent!

Tuesday

AWS Ambassadors were on the Cloud Track, which meant we had priority seating at the keynotes. Tuesday started with Adam Selipsky’s keynote and many great announcements.

The announcement that resonated most with me was the launch of a serverless version of Amazon OpenSearch Service. In the past, I have often wanted to use this service with serverless startups, but the operational cost made it challenging: the minimum operating cost was often higher than the total monthly cost of their serverless platform. I have not yet used the new serverless version, and the pricing appears to be per hour, which does not feel very serverless, so it might work more like Aurora Serverless. If that is the case, its value will depend on how predictable the workloads are or how short the cold-start time is. With a short cold-start time, the per-hour fee would only kick in when a user request comes in, letting us keep fees close to actual utilisation. I will need to PoC this at some point to be sure.

Other updates from Adam included:

- A commitment from AWS to operate 100% on renewable energy by 2025

- Continued development towards their zero-ETL vision, with a fully managed integration between Aurora and Redshift and a Redshift-Spark integration.

- Several data updates, including the new Security Lake service, which enables teams to build a security data lake with just a few clicks (hopefully, a few lines of IaC is also an option…). The service automatically collects and aggregates security data from AWS partner solutions such as Cisco, Palo Alto and others, and you can add your own data sources. DataZone is a new service where teams can securely share and collaborate on data sets. He also talked a bit about QuickSight Q, where data and data predictions can be queried using natural language.

- AWS Supply Chain, another new serverless service, helps manage supply chains and their data.

- Lastly, Amazon Omics is a new serverless service for the medical industry that helps to analyse genomes and similar data.

One of the customer speakers during his keynote was from Space Perspective (spaceperspective.com). They are looking to take people to space far more affordably using capsules lifted by a special balloon, which they call a carbon-neutral spaceship. However, the trip only takes you to the edge of space (30 km) for two hours and is not quite zero-g. So, I probably won’t be rushing to buy a $125k ticket any time soon.

On a separate note, I quite liked the presentation style that all the keynotes used. They introduced a topic with an exciting story unrelated to products or services, then used elements of that story as analogies for various problem statements before introducing the services or features that help to address those problems. I shall keep this in mind for my own future presentations, where appropriate.

Wednesday

Wednesday started with Swami Sivasubramanian, the vice president of machine learning. There was some repetition of the services that Adam mentioned, but Swami went into far more detail on each.

Updates included:

- Apache Spark integration with Athena (serverless SQL query service)

- DocumentDB elastic scaling: not serverless, but at least a more fully managed option that will help teams focus more on adding value and less on plumbing.

- SageMaker Geospatial. A new capability within SageMaker to process geospatial data such as maps, satellite imagery, location data, mining surveys, etc. While this is probably not that relevant to Sourced, my background in the Maritime & Energy industries got me very excited about this service, and I mentioned it to a few ex-colleagues.

- Redshift Multi-AZ. While Redshift could already fail over to another AZ, this could still disrupt operations. Proper multi-AZ support is a big win for availability.

- Trusted Language Extensions for PostgreSQL (RDS & Aurora), which make it easier to build high-performance extensions that run safely on these managed services.

- GuardDuty now offers protection for RDS databases.

- Glue has a new feature called Data Quality that can monitor data lakes and provide alerts of any perceived quality concerns of the contained data.

- Redshift data sharing now supports centralised access control (with AWS Lake Formation).

- SageMaker ML Governance for improved control over data access permissions and sharing.

- Redshift now offers the capability to automatically copy data from S3 buckets (part of the zero-ETL vision).

- Lastly, many new data connectors were announced for AppFlow and SageMaker Data Wrangler. This seems to be another step towards the zero-ETL vision, making it easier to pull in data from all kinds of sources without needing to manually code the integration or any conversions that may be needed.

On Wednesday evening, we had the partner connection, a dinner for AWS partners from APAC. I chatted with a few people from competing consulting agencies and with the AWS head of APJ partnerships. The dinner was excellent, including what I think was the largest steak I have ever eaten on my own.

Thursday

Thursday brought the keynote I was most looking forward to: Werner Vogels, CTO of Amazon. It seemed I was not alone in that excitement; the room was absolutely packed. Luckily, I had gotten there early and was third in line when the doors opened, so I had an excellent seat near the front.

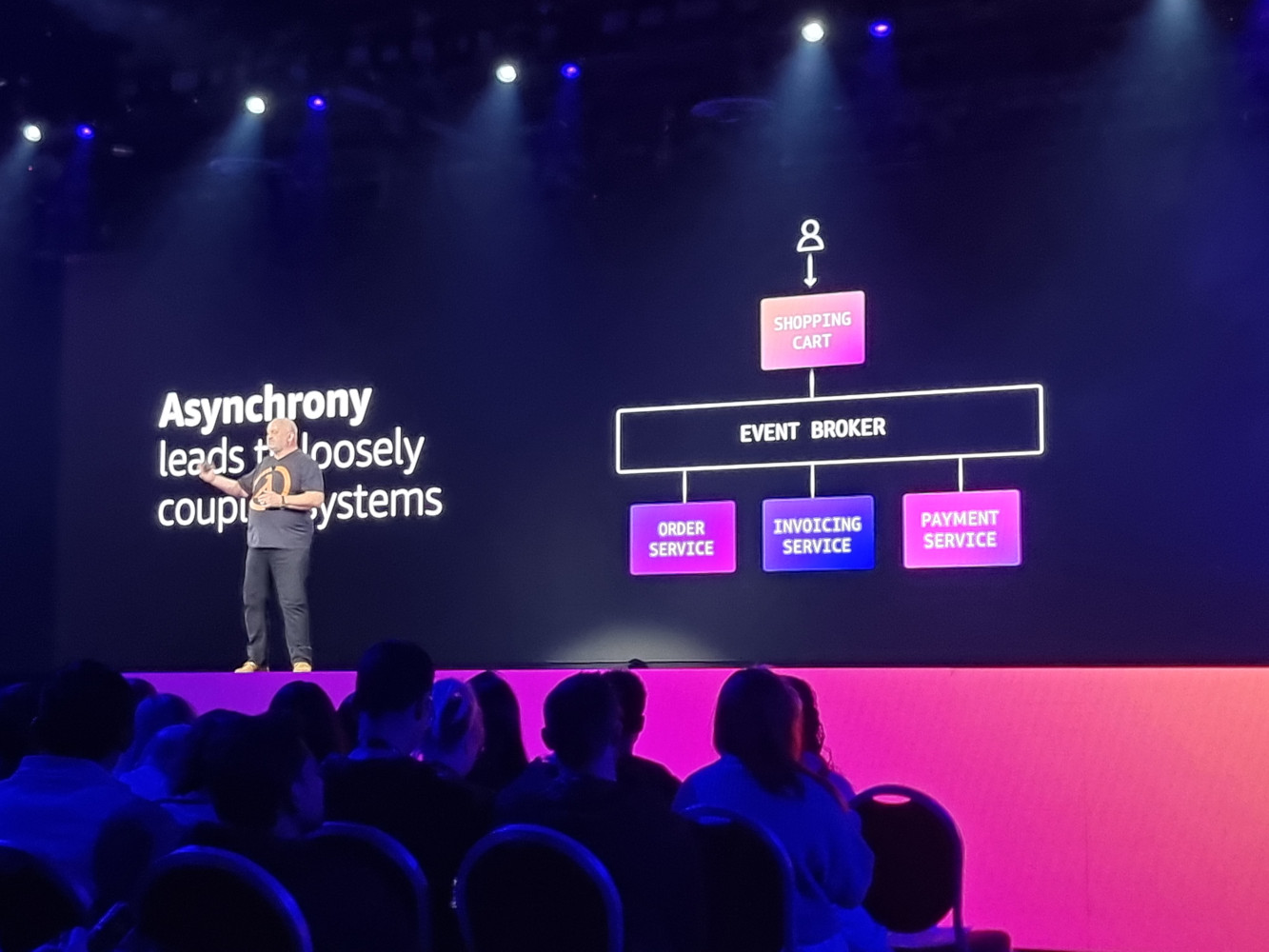

It kicked off with a Matrix-style intro video featuring the man himself, with a good dash of dry humour. The main theme was that life is not synchronous, so the cloud and applications should not be either.

Werner’s keynote had a heavy focus on serverless, but this year it went much deeper: not just general serverless services, but some of the foundational design approaches behind serverless, such as event-driven architecture and asynchronicity. It was great to hear, especially as many of his comments aligned strongly with my serverless book. For example, in the context of event-driven architecture, he noted at one point that “Service A doesn’t need to know that service B exists”. This is very similar to this line from my book’s Stateless architecture section: “An invoice microservice does not need to care about the previous or next step”.
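The idea that Service A doesn’t need to know Service B exists can be sketched in a few lines of Python. This is a minimal, in-memory illustration under my own assumptions: the EventBus class and the order, invoice and email services are hypothetical stand-ins, not an AWS API. In a real serverless system, a managed event bus would play the role of the class below.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

# Hypothetical handler type: a function that receives an event payload.
Handler = Callable[[dict], None]


class EventBus:
    """Hypothetical in-memory stand-in for a managed event bus."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> int:
        # The publisher never references the consumers directly;
        # it only learns how many handlers were invoked.
        handlers = self._subscribers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


ledger: List[float] = []
sent_emails: List[str] = []


def order_service(bus: EventBus, order_id: str, amount: float) -> None:
    # "Service A": announces the order; it has no reference to invoicing or email.
    bus.publish("OrderPlaced", {"order_id": order_id, "amount": amount})


def invoice_service(event: dict) -> None:
    # "Service B": reacts to the event without knowing who produced it.
    ledger.append(event["amount"])


def email_service(event: dict) -> None:
    sent_emails.append(f"Thanks for order {event['order_id']}")


if __name__ == "__main__":
    bus = EventBus()
    bus.subscribe("OrderPlaced", invoice_service)
    bus.subscribe("OrderPlaced", email_service)
    order_service(bus, "A-100", 42.0)
    print(ledger, sent_emails)
```

Adding a new consumer, say a loyalty-points service, only requires another `subscribe` call; the order service does not change, which is the decoupling Werner was describing.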

His announcements included:

- A serverless map-reduce capability (Distributed Map) as a new feature in Step Functions

- The launch of a new service called AWS Application Composer, intended to make designing multi-component serverless applications more accessible for people new to serverless. I’m unsure how relevant this is to the industries I currently work in, or whether this is necessarily the best way to approach serverless architecture design. However, it will certainly lower the barrier to entry and get more people experiencing and experimenting with this architecture, which is a win.

- EventBridge Pipes, a new capability that links services together, with the option to filter events along the way. Another zero-ETL achievement.

- Trustpilot was a guest speaker, showing quite an impressive architecture that is entirely serverless, fully event-driven and operating at global scale.

- CodeCatalyst is a new developer workflow and life-cycle management service that includes pipelines, tools, integrations and blueprints. I wonder if it can be used in regulated industries; the blueprint option seems similar to how we secure the cloud while enabling developers.

- Werner also had a focus on 3D. For example, he noted that AWS has been investing in an open-source 3D engine project called O3DE (www.o3de.org), a cloud-optimised 3D engine that runs in common web browsers.

- There was a case study from Matterport, a company specialising in devices for capturing 3D spaces, in which they combined their capabilities with AWS IoT services to show real-time IoT data in a 3D model that replicated the actual space. Seeing the devices and data represented this way made for quite a good user experience.

- Epic Games presented some of the things they are working on with Unreal Engine combined with the cloud. For example, their character builder, MetaHuman, runs on AWS using GPU instances.

- Werner announced a new 3D service called SimSpace Weaver, for building very large-scale spatial simulations that are computed across a fleet of powerful instances. The results can then be output to one of the supported 3D engines.
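Going back to the Step Functions map-reduce announcement, the pattern itself is easy to sketch. The Python below is a plain, local illustration, not the Step Functions API: a thread pool stands in for the parallel child executions that Step Functions would fan out, and the word-count job and function names are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce
from typing import Iterator, List


def batches(items: List[str], size: int) -> Iterator[List[str]]:
    # Split the input into fixed-size batches (one batch per parallel worker).
    for start in range(0, len(items), size):
        yield items[start:start + size]


def map_batch(lines: List[str]) -> Counter:
    # Map step: count words within a single batch.
    counts: Counter = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def reduce_counts(partials: List[Counter]) -> Counter:
    # Reduce step: merge the partial counts into one total.
    return reduce(lambda total, part: total + part, partials, Counter())


def word_count(lines: List[str], batch_size: int = 2, workers: int = 4) -> Counter:
    # Fan out the map step in parallel, then reduce the results.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(map_batch, batches(lines, batch_size)))
    return reduce_counts(partials)


if __name__ == "__main__":
    lines = ["serverless is async", "life is not synchronous", "async is fine"]
    print(word_count(lines).most_common(2))
```

The appeal of a managed version is that the fan-out, retries and result collection are handled by the service rather than by code like `word_count` above.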

The focus on serverless may prove to be excellent timing, with the 2nd edition of my serverless book coming out soon. The 3D capabilities, while less relevant to Sourced, I personally find quite exciting.

On Thursday afternoon, I volunteered for AWS Partner Network booth duty. As it was almost the end of the week, and people were probably getting ready for re:play, it was not very busy. AWS wanted to get rid of the remaining T-shirts (mostly XL and XXL sizes), so I aggressively handed them out to passers-by. I must have handed out over 100 T-shirts in the two hours I was on duty. Although interactions were short, I did get to talk to a lot of people.

Thursday night was re:play. I had not been to a party like this in almost 20 years. I got there quite early, so I was able to grab some food without waiting in line too long. There were several stalls offering different snacks, such as sliders, hotdogs and pulled pork sandwiches.

I then proceeded to the EDM stage. As with the food, it wasn’t that busy yet, so I could easily make my way to the front. There were three acts: a trio of ladies, followed by two guys, and finally the headliner, Martin Garrix, who is supposedly well known, but I haven’t kept track of popular DJs for the last decade, so I had never heard of him. As the night progressed, it became more and more packed at the front. By 23:30, I had had enough of being squished, and I was tired (old), so I headed out. To my surprise, it was packed the entire way out of the venue; it took 15 minutes to get through the crowd to the exit, and there must have been 20,000+ people behind me. Having left slightly before the end, I was able to get a shuttle right away without queuing and got back to the hotel by midnight.

Friday

The final re:Invent day was very quiet. There were only a few sessions in the morning, and I attended one on privacy.

They covered some of the new guardrails in Control Tower that are relevant to privacy. They also mentioned a service called AWS Signer, which seems quite useful and is something I will consider adding to our serverless accelerator.

After the morning sessions, I did some shopping for the family and had an early steak dinner.

Saturday

Saturday was time to check out and start the long flight home. To wrap up the week, I booked a Tesla to the airport, which seemed fitting (they are still quite rare in Singapore).

While waiting at the airport and in transit, I reviewed my notes and photos and started working on this article. Hopefully, I will be back next year with a speaking slot to talk about modern cloud management strategies for regulated enterprises!