Co-authored with KangZheng Li

Cost-Optimisation and related updates

Amazon S3 announces a price reduction up to 31% in three storage classes

This is the latest in a line of more than 11 price reductions AWS has made to S3 since it launched. Still, it pays to plan storage carefully, as some costs are easily missed.

Factors to note are the minimum billable object size, the minimum storage duration and the per-object storage overhead of each storage class.

For example, objects stored in the Infrequent Access classes are charged for a minimum of 128KB each. So, a single zipped 10MB file will be cheaper than 1,000 individual 10KB files, even though the total amount of data stored is the same.
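A minimal sketch of that arithmetic, assuming decimal units (10MB = 10,000KB), ignoring any compression gained by zipping, and using the 128KB minimum quoted above. The function name is hypothetical and only counts billed kilobytes, not dollars:

```python
# Minimum billable object size for the Infrequent Access classes (from the article).
IA_MIN_BILLABLE_KB = 128

def ia_billable_kb(object_sizes_kb):
    """Total KB billed in Infrequent Access for a list of object sizes.

    Any object smaller than the minimum is billed as if it were 128KB.
    """
    return sum(max(size, IA_MIN_BILLABLE_KB) for size in object_sizes_kb)

# One zipped 10MB archive vs 1,000 separate 10KB files.
print(ia_billable_kb([10_000]))     # 10000
print(ia_billable_kb([10] * 1_000)) # 128000
```

The same 10MB of data is billed as roughly 128MB when stored as 1,000 small objects.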

For Glacier Flexible Retrieval and Deep Archive, an additional 40KB of overhead is billed per object, and a minimum of 90 days of storage is billable for Glacier Flexible Retrieval and 180 days for Deep Archive.
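To see how the overhead and minimum duration compound for small, short-lived objects, here is a rough sketch in billed KB-days. It assumes the rules exactly as stated above and, for simplicity, bills all 40KB of overhead at a single rate; the dictionary keys and function name are illustrative, not an AWS API:

```python
# Billing rules as described in the article (assumed, not pulled from the
# AWS price list): per-object overhead and minimum billable days.
RULES = {
    "GLACIER": {"overhead_kb": 40, "min_days": 90},
    "DEEP_ARCHIVE": {"overhead_kb": 40, "min_days": 180},
}

def billable_kb_days(storage_class, size_kb, days_stored):
    """KB-days billed for one object, including overhead and minimum duration."""
    rule = RULES[storage_class]
    billed_kb = size_kb + rule["overhead_kb"]
    billed_days = max(days_stored, rule["min_days"])
    return billed_kb * billed_days

# A 10KB log file archived to Deep Archive and deleted after 30 days:
# 50KB is billed for 180 days.
print(billable_kb_days("DEEP_ARCHIVE", 10, 30))  # 9000
```

For that file, 30 days at 10KB would be 300 KB-days, so the rules inflate the bill thirtyfold.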

Similarly, we should not forget request costs in S3. Storing an object has a one-time request cost on top of the recurring storage cost. Here too, it can be cost-effective to merge or zip files before uploading.

Consider, for example, 10 million 5KB files, a common pattern when archiving log or IoT data. The total storage size would be 50GB, costing $1.25 per month in the Singapore region. However, 10 million upload actions, or ‘PUT requests’, would cost $50 in the same region.

If we zipped or merged that data into fifty 1GB files, the storage cost would be the same, as it is still 50GB in total, but the PUT request cost would be less than one tenth of a cent.
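The comparison can be reproduced with a few lines of arithmetic. The PUT price below is derived from the article's own figures ($50 for 10 million requests, i.e. $0.005 per 1,000) and the storage price from the S3 Standard Singapore rate in the table further down; treat both as snapshot values rather than current pricing:

```python
# Prices implied by the article's Singapore figures (snapshot, may have changed).
PUT_PRICE_PER_1000 = 0.005          # $50 / 10 million PUTs
STORAGE_PRICE_PER_GB_MONTH = 0.025  # S3 Standard

def upload_cost(num_objects):
    """One-time PUT request cost for uploading num_objects objects."""
    return num_objects / 1000 * PUT_PRICE_PER_1000

def monthly_storage_cost(total_gb):
    """Recurring storage cost, independent of how the data is split into objects."""
    return total_gb * STORAGE_PRICE_PER_GB_MONTH

print(f"${monthly_storage_cost(50):.2f}")  # $1.25 per month either way
print(f"${upload_cost(10_000_000):.2f}")   # $50.00 for 10M x 5KB files
print(f"${upload_cost(50):.5f}")           # $0.00025 for 50 x 1GB files
```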

https://aws.amazon.com/about-aws/whats-new/2021/11/amazon-s3-price-reduction-storage-classes/

Announcing the new Amazon S3 Glacier Instant Retrieval storage class - the lowest cost archive storage with milliseconds retrieval

This update is not as crazy as it sounds, given the trend of reducing Glacier retrieval times over the last few years, possibly related to technology advancements in AWS data centres. In this case, it looks like a merging of Glacier and the Infrequent Access tiers, which already offer instant retrieval. Unlike the other Glacier options, the new Glacier Instant Retrieval class has a minimum billable size of 128KB per object, the same as the Infrequent Access tiers.

Announcing the new S3 Intelligent-Tiering Archive Instant Access tier - Automatically save up to 68% on storage costs

This is clearly enabled by the new “Glacier Instant Retrieval” class above. It will further help to automate cost optimisation without retrieval times becoming an issue for any applications sitting in front of the storage service.

Amazon DynamoDB announces the new Amazon DynamoDB Standard-Infrequent Access table class, which helps you reduce your DynamoDB costs by up to 60 percent

This is awesome news for Serverless applications that use DynamoDB heavily. Typically, given the storage cost of DynamoDB, we would have to expire stale data using the Time to Live (TTL) feature. If we needed to retain a copy, we would have to architect a solution using DynamoDB Streams to archive the data into S3 before it was deleted from DynamoDB.

While the new pricing is less than half the cost of regular DynamoDB storage, it is still no match for S3 pricing. The following prices are for the Singapore region:

| Storage option | Price (USD per GB-month) |
|---|---|
| DynamoDB Standard Storage | $0.285 |
| DynamoDB Standard IA Storage | $0.114 |
| S3 Standard | $0.025 |
| S3 Standard Infrequent Access | $0.0138 |
| S3 One Zone Infrequent Access | $0.011 |
| S3 Glacier Instant Retrieval | $0.0045 |

Nevertheless, this 60% discount may present an opportunity to keep data in DynamoDB slightly longer where that adds value, for example, dashboards that show a longer range of historical data.

However, keep in mind that throughput for this table class costs up to 20% more, in both provisioned and on-demand capacity modes. So, storage is cheaper, but reads and writes are more expensive.
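Whether Standard-IA pays off therefore depends on the ratio of storage to throughput spend. Below is a rough comparison sketch using the Singapore storage prices from the table and assuming the full 20% throughput premium; `throughput_cost` is your current monthly read/write spend under the Standard class, and the function names are hypothetical. The class strings match DynamoDB's table class values, but nothing here calls AWS:

```python
STANDARD_STORAGE = 0.285     # $/GB-month, DynamoDB Standard (Singapore, from the table)
STANDARD_IA_STORAGE = 0.114  # $/GB-month, DynamoDB Standard-IA (Singapore, from the table)

def monthly_cost(storage_gb, throughput_cost, table_class, ia_uplift=0.20):
    """Estimated monthly cost; ia_uplift is the assumed Standard-IA throughput premium."""
    if table_class == "STANDARD":
        return storage_gb * STANDARD_STORAGE + throughput_cost
    if table_class == "STANDARD_INFREQUENT_ACCESS":
        return storage_gb * STANDARD_IA_STORAGE + throughput_cost * (1 + ia_uplift)
    raise ValueError(f"unknown table class: {table_class}")

def cheaper_class(storage_gb, throughput_cost, ia_uplift=0.20):
    """Return whichever table class gives the lower estimated monthly cost."""
    std = monthly_cost(storage_gb, throughput_cost, "STANDARD", ia_uplift)
    ia = monthly_cost(storage_gb, throughput_cost, "STANDARD_INFREQUENT_ACCESS", ia_uplift)
    return "STANDARD_INFREQUENT_ACCESS" if ia < std else "STANDARD"

# Storage-heavy table: 500GB stored, $20/month of reads and writes.
print(cheaper_class(500, 20))  # STANDARD_INFREQUENT_ACCESS
# Throughput-heavy table: 10GB stored, $200/month of reads and writes.
print(cheaper_class(10, 200))  # STANDARD
```

Put differently, Standard-IA wins when the storage saving (0.171 × GB stored) exceeds the throughput premium (0.2 × current throughput cost).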

New Serverless modes

Amazon Kinesis Data Streams On-Demand – Stream Data at Scale Without Managing Capacity

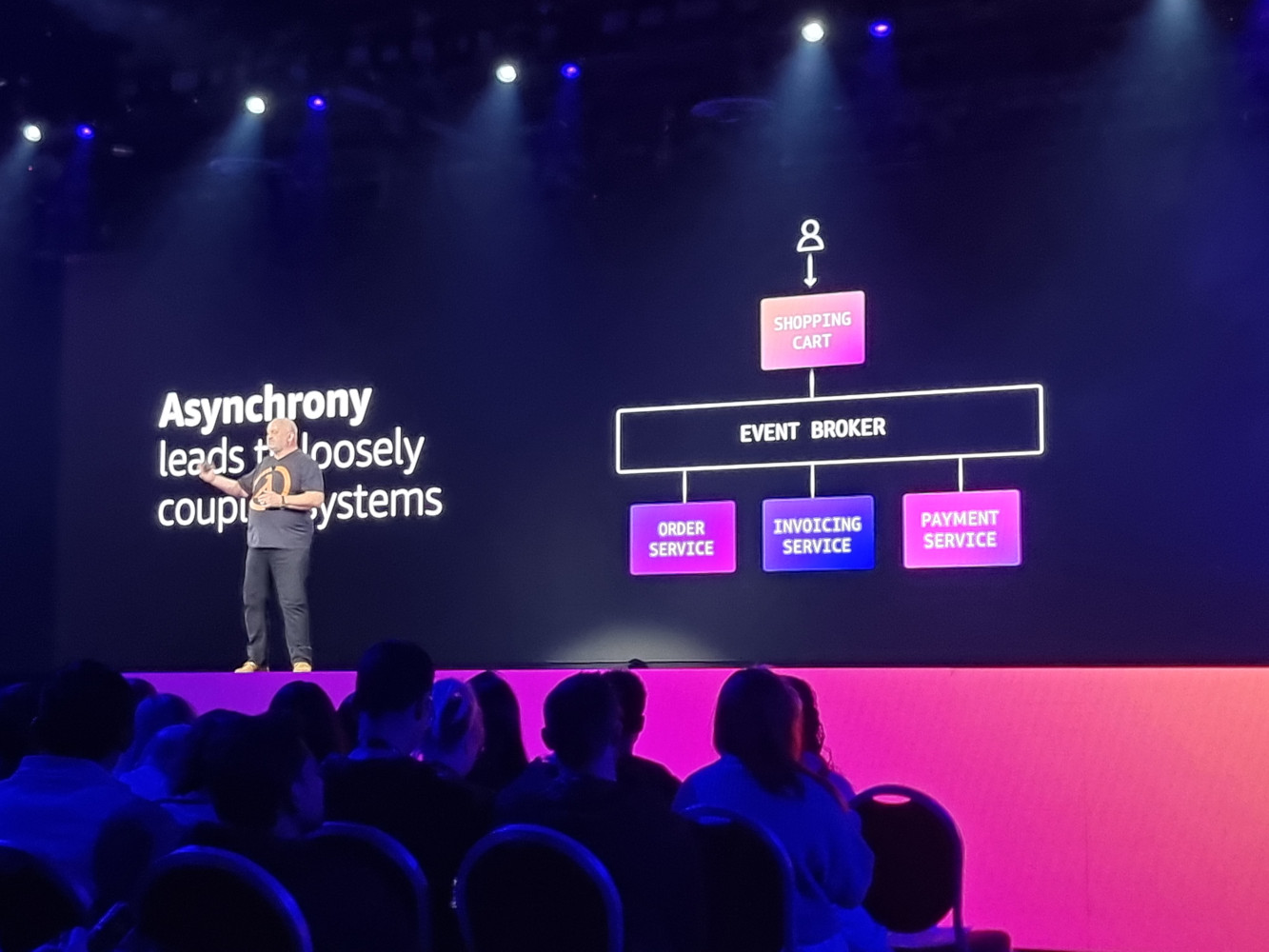

This is not entirely unexpected, given the trend of services being made more Serverless. We have seen similar uplifts with DynamoDB and, of course, Aurora Serverless. This will be a welcome update for cost optimisation and for minimising overheads in use cases such as IoT data ingestion, where the incoming data rate may be inconsistent, resulting in billable idle time with provisioned Kinesis.

With this update, we no longer need to estimate, manage and pay for Kinesis shards. We simply start using the service, and it scales to meet our workload, billing us for actual usage. As with DynamoDB on-demand, there is likely a break-even point where it makes financial sense to switch to provisioned mode if the workload is sufficiently consistent.
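To get a feel for how much idle capacity a spiky workload leaves on a provisioned stream, the sketch below sizes shards for the busiest hour using the 1MB/s write capacity of a provisioned shard. It assumes the shard count stays fixed for the whole day (no resharding), ignores the per-shard records-per-second limit, and uses a made-up traffic profile; it measures unused capacity, not dollars:

```python
import math

SHARD_WRITE_MB_PER_SEC = 1.0  # write capacity of one provisioned Kinesis shard

def shards_for_peak(hourly_mb_per_sec):
    """Shards needed so a fixed-size stream can absorb the busiest hour."""
    return math.ceil(max(hourly_mb_per_sec) / SHARD_WRITE_MB_PER_SEC)

def idle_fraction(hourly_mb_per_sec):
    """Share of provisioned write capacity left unused over the period."""
    shards = shards_for_peak(hourly_mb_per_sec)
    capacity = shards * SHARD_WRITE_MB_PER_SEC * len(hourly_mb_per_sec)
    return 1 - sum(hourly_mb_per_sec) / capacity

# Hypothetical IoT profile: 0.5 MB/s for 20 hours, bursts of 8 MB/s for 4 hours.
profile = [0.5] * 20 + [8.0] * 4
print(shards_for_peak(profile))         # 8
print(f"{idle_fraction(profile):.0%}")  # 78%
```

The flatter the profile, the closer the idle fraction gets to zero, which is where provisioned mode starts to look attractive.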

Introducing Amazon SageMaker Serverless Inference (preview)

This is huge. Data analytics is increasingly a component of web applications and platforms. While some models can run in Lambda, or even on-demand in Fargate, many models require a GPU, which is currently not available in either of those options. As a result, an otherwise Serverless platform had to resort to servers to power its ML models. That made an event-driven design very difficult, given the time it takes to boot a server, and resulted in billable idle time as well as maintenance and security overheads. With this update, we can now host ML models in a fully Serverless way, although at launch Serverless Inference runs on CPU only, so GPU-bound models still need a provisioned endpoint.

https://aws.amazon.com/about-aws/whats-new/2021/12/amazon-sagemaker-serverless-inference/

Introducing Amazon Redshift Serverless – Run Analytics At Any Scale Without Having to Manage Data Warehouse Infrastructure

Aligned with the trend of making existing services more Serverless, this update was long overdue, and I have been waiting for this for quite some time.

I can now put to rest my habit of pointing out that Google Cloud has BigQuery, essentially a Serverless Redshift, whenever I talk about data warehousing capabilities on AWS.

Amazon EMR

Introducing Amazon EMR Serverless in preview

Like Redshift, EMR was long overdue for a Serverless upgrade.

I expect that, similar to other services that have both billing models, the Serverless model is typically intended for lower-volume and sporadic workloads. As such, make sure to do a cost comparison and calculate the break-even point when it might make financial sense to switch from Serverless to provisioned billing.
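A break-even calculation only needs two numbers: the flat monthly cost of the provisioned option and the per-unit price of the Serverless option. The sketch below assumes Serverless cost scales linearly with usage and that the provisioned capacity covers the whole workload; the prices are purely hypothetical placeholders, not real EMR or AWS rates, so substitute figures from the relevant pricing page:

```python
def break_even_units(provisioned_monthly_cost, serverless_price_per_unit):
    """Monthly usage above which the flat provisioned option becomes cheaper."""
    return provisioned_monthly_cost / serverless_price_per_unit

def cheaper_mode(monthly_units, provisioned_monthly_cost, serverless_price_per_unit):
    """Compare linear Serverless cost against a flat provisioned cost."""
    serverless_cost = monthly_units * serverless_price_per_unit
    return "serverless" if serverless_cost < provisioned_monthly_cost else "provisioned"

# HYPOTHETICAL prices: $600/month provisioned vs $0.50 per unit of work
# (for example, per vCPU-hour) on the Serverless option.
print(break_even_units(600, 0.50))    # 1200.0
print(cheaper_mode(300, 600, 0.50))   # serverless
print(cheaper_mode(2000, 600, 0.50))  # provisioned
```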

https://aws.amazon.com/about-aws/whats-new/2021/11/amazon-emr-serverless-preview/

Amazon MSK Serverless

Introducing Amazon MSK Serverless in public preview

The final ‘new Serverless mode’ update of this re:Invent is for MSK, Amazon Managed Streaming for Apache Kafka. I have not used MSK myself, so I can’t comment much on this beyond noting the Serverless update. My past use cases could all be addressed with SQS or Kinesis, so I’m guessing MSK mostly exists for those who want something a bit less proprietary to AWS.

https://aws.amazon.com/about-aws/whats-new/2021/11/amazon-msk-serverless-public-preview/

New or Changed Features and Capabilities

Amazon S3 Event Notifications with Amazon EventBridge help you build advanced serverless applications faster

This could be a great benefit for cost management and, in extreme cases, for reducing the risk of hitting Lambda concurrency limits, because more powerful filtering lets us cut the number of unnecessary Lambda invocations. An added advantage is that these events include storage class change events, so we can now respond to those too, which is very useful for tracking costs, for example.
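As an illustration of that filtering, the sketch below builds two EventBridge event patterns: one that matches only new objects under a key prefix, and one for storage class changes. The bucket name and prefix are hypothetical, and the detail-type strings are as I understand them from the S3 EventBridge documentation, so verify them there. The resulting JSON string is what you would pass as the EventPattern of a rule (for example via boto3's events client put_rule), and EventBridge delivery has to be enabled on the bucket:

```python
import json

# Hypothetical bucket name, for illustration only.
BUCKET = "my-ingest-bucket"

# Match only new objects under uploads/ in one bucket. Any other S3 event
# is dropped by EventBridge before it reaches the Lambda target.
uploads_pattern = {
    "source": ["aws.s3"],
    "detail-type": ["Object Created"],
    "detail": {
        "bucket": {"name": [BUCKET]},
        "object": {"key": [{"prefix": "uploads/"}]},
    },
}

# Separate rule for storage class transitions, e.g. to feed cost tracking.
storage_class_pattern = {
    "source": ["aws.s3"],
    "detail-type": ["Object Storage Class Changed"],
    "detail": {"bucket": {"name": [BUCKET]}},
}

# EventBridge rules take the pattern as a JSON string.
uploads_pattern_json = json.dumps(uploads_pattern)
storage_class_pattern_json = json.dumps(storage_class_pattern)
print(uploads_pattern_json)
print(storage_class_pattern_json)
```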

Amazon SQS Enhances Dead-letter Queue Management Experience For Standard Queues

This is a great update for event-driven architectures where we use DLQs to catch failed events from SQS, SNS or Lambda. DLQs provide a means to troubleshoot such failures, and there were already ways to get failed events back into processing, but this update makes that considerably easier.

Amazon Athena now supports new Lake Formation fine-grained security and reliable table features

Athena features frequently in Serverless data lake designs, so it’s great to see improvements to this service. This update improves security, specifically our ability to apply the Principle of Least Privilege, by tightening the fine-grained permissions we can set for users accessing our data, including column-, row- and cell-level permissions.

Announcing Amazon Athena ACID transactions, powered by Apache Iceberg (Preview)

The second update announced for Athena is support for ACID transactions on data stored in S3. It is currently in preview, but this news will be well received in regulated industries, which often have a regulatory requirement to use ACID-compliant databases for particular data sets.

https://aws.amazon.com/about-aws/whats-new/2021/11/amazon-athena-acid-apache-iceberg/

AWS Cloud Development Kit (CDK) Version 2

AWS Cloud Development Kit (AWS CDK) v2 is now generally available

Super excited about this update! I’m a huge fan of CDK and how it provides both flexibility and ease-of-use for developers to provision cloud architecture. Some key updates in v2 that I’m looking forward to exploring are:

- Only stable APIs in the core library. Experimental features are maintained separately, unlike version 1, where new, unstable updates could break things, forcing a downgrade until they were resolved.

- The AWS Construct Library is consolidated into a single package, aws-cdk-lib, which eliminates the need to install individual packages for each AWS service.

- The option to disable automatic stack rollback, for easier troubleshooting when your deployment fails. This is a standard CloudFormation feature that had not yet been made available in CDK.

- cdk watch (intended for development, not recommended for production), which continuously monitors your application and infrastructure code for changes and immediately deploys the stack when a change is detected. This is an interesting extension of the framework into reactive cloud controls.

- The new assertions library for running unit tests on your CDK stacks.

Construct Hub

AWS announces Construct Hub general availability

Also related to CDK, the new Construct Hub is a centralised, community-driven registry for publishing CDK construct libraries. I’m looking forward to seeing the type and quality of blueprints released here. This could help accelerate organisations’ Serverless adoption efforts, so adherence to quality, security and other best practices will be important to make it a trustworthy repository.

https://aws.amazon.com/about-aws/whats-new/2021/12/aws-construct-hub-availability/

Introducing Amazon CloudWatch Metrics Insights (Preview)

Monitoring, and especially automated reactions to monitoring data, is key to success for Serverless solutions. Being able to run more powerful SQL queries across CloudWatch metrics could help with that, so I’m looking forward to experimenting with this.

https://aws.amazon.com/about-aws/whats-new/2021/11/amazon-cloudwatch-metrics-insights-preview/

Introducing Amazon CloudWatch Evidently for feature experimentation and safer launches

This is an approach similar to canary deployments, which are already well supported in Lambda and API Gateway, so I don’t have any immediate Serverless use cases for it in mind. Still, any method of managing deployment risk is welcome, especially if it can drive automation.

Introducing Amazon CloudWatch RUM for monitoring applications’ client-side performance

Since joining sourced, I have been a lot less active in front-end applications. Still, this update makes complete sense, as front-end logs were one of the last remaining gaps in CloudWatch logging.

With this added, we can now have true end-to-end logs to go with our end-to-end tests, showing activity in every step of a request flow.

When implemented correctly, I think this will greatly improve testing productivity, helping to zoom in on the root cause significantly faster.

AWS Well-Architected Framework

New Sustainability Pillar for the AWS Well-Architected Framework

The Well-Architected Framework now has a sixth pillar, Sustainability, alongside Operational Excellence, Security, Reliability, Performance Efficiency and Cost Optimization. This is especially relevant to Serverless. As I have pointed out many times before, less waste and idle time means not only lower cost but also lower electricity consumption, and therefore a smaller carbon footprint for organisations looking to be greener.

Cover image for this article taken from the re:Invent website